NVIDIA

Signs: An AI-powered platform for learning sign language

Services

- AI

- Customer Experience

- Tech & Data

The challenge

For the 11 million Deaf and hard-of-hearing people in the U.S., access to effective communication is a daily challenge. For Deaf children, the stakes are even higher: 90% are born to hearing parents who may have little or no experience with sign language. Without early exposure to American Sign Language (ASL), these children risk language deprivation, a condition that can affect cognitive development, education, and emotional well-being.

But learning ASL on your own has always been difficult. While there are incredible teachers, ASL programs, and schools that offer instruction, many people don’t have access to them. Traditional ASL classes can be expensive and geographically limited. Video tutorials exist, but they lack the one thing learners need most: feedback. They don’t tell you when your hand position is slightly off. They don’t help you adjust your movements in real time. They don’t respond to you.

ASL education needed a revolution—one that could make learning more interactive, more accessible, and more immediate. One that could empower learners, bridge the gap between the Deaf and hearing communities, and make mastering ASL more intuitive for anyone, anywhere.

The solution – Signs

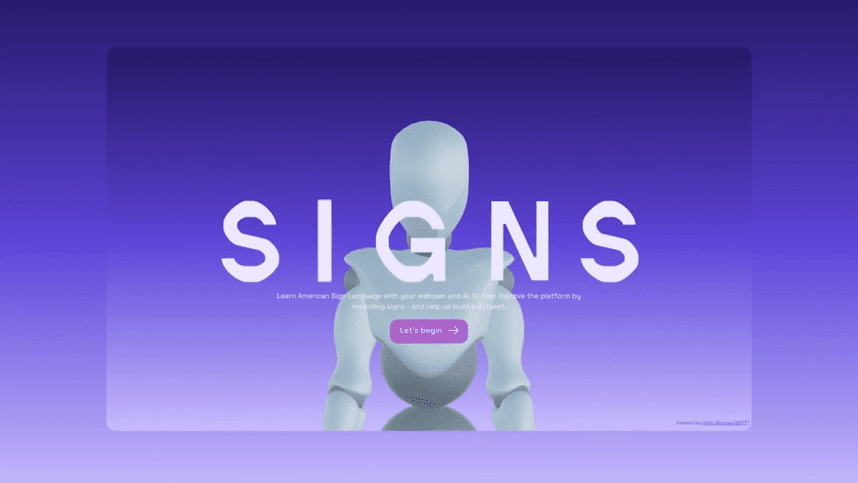

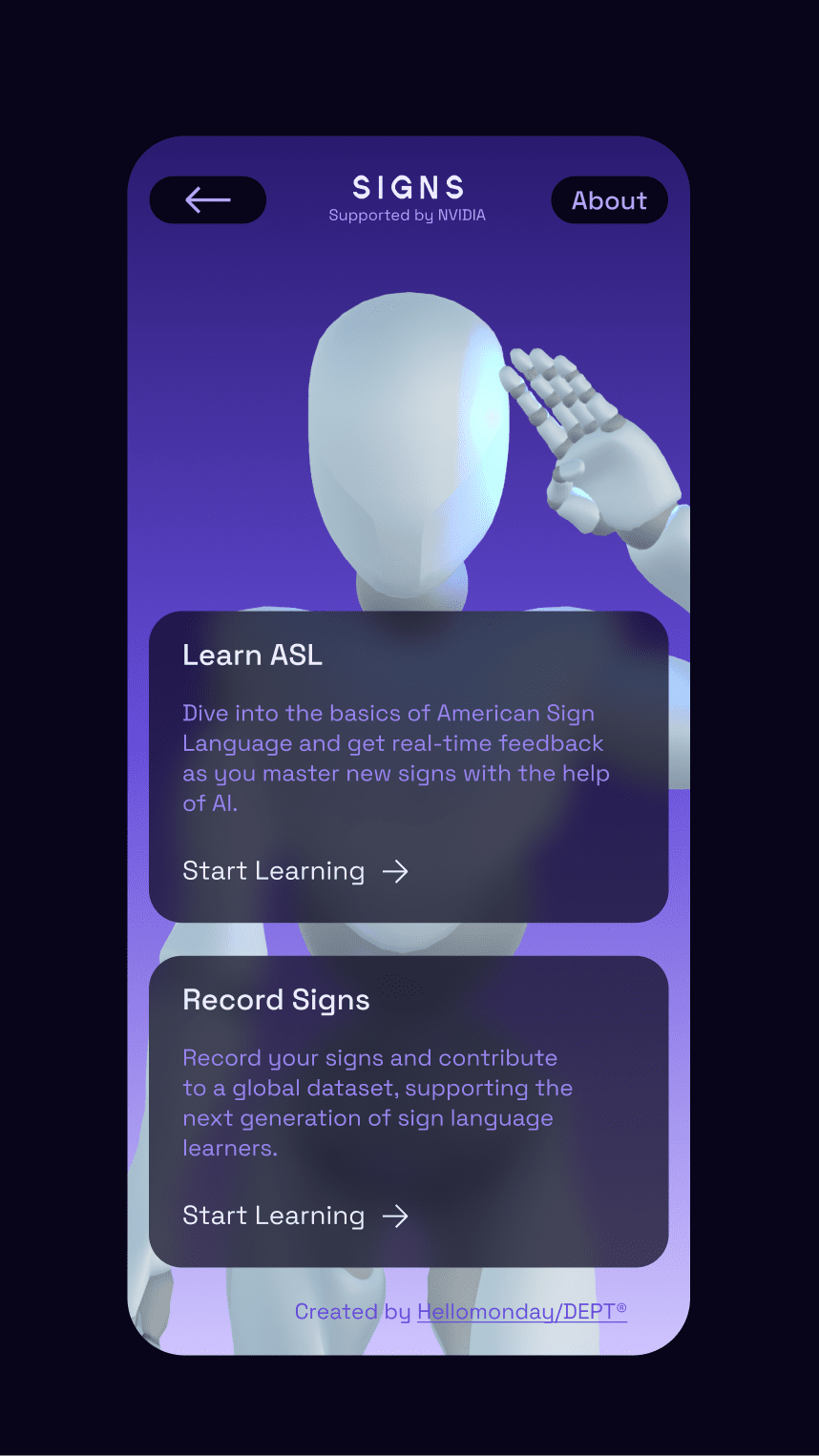

In collaboration with NVIDIA and the American Society for Deaf Children, we created Signs—a first-of-its-kind, AI-powered sign language platform that makes learning ASL as natural as a conversation.

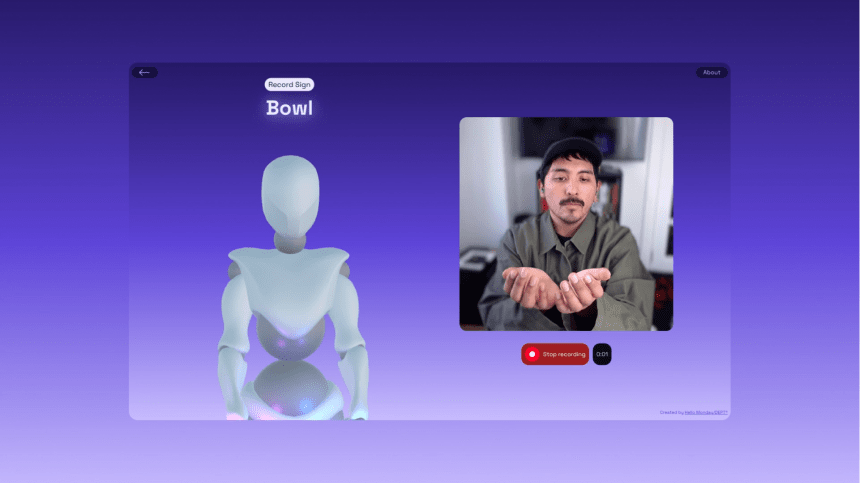

Using AI-powered computer vision and machine learning, Signs turns any camera into an interactive sign language coach.

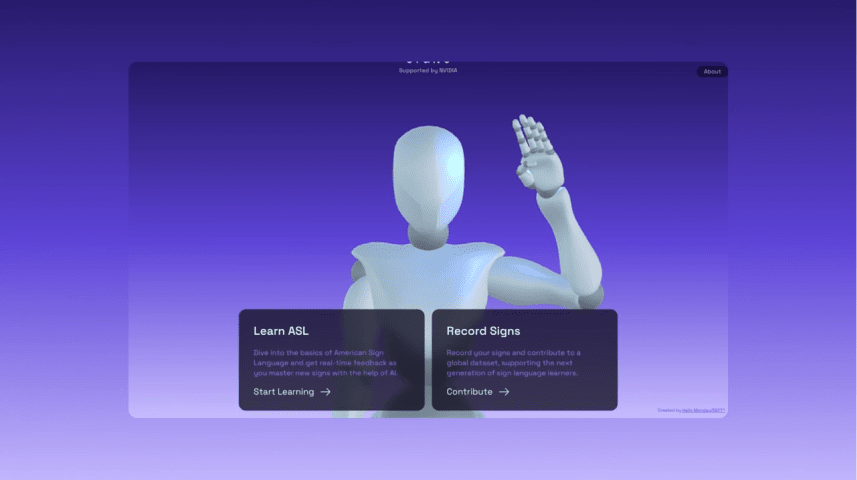

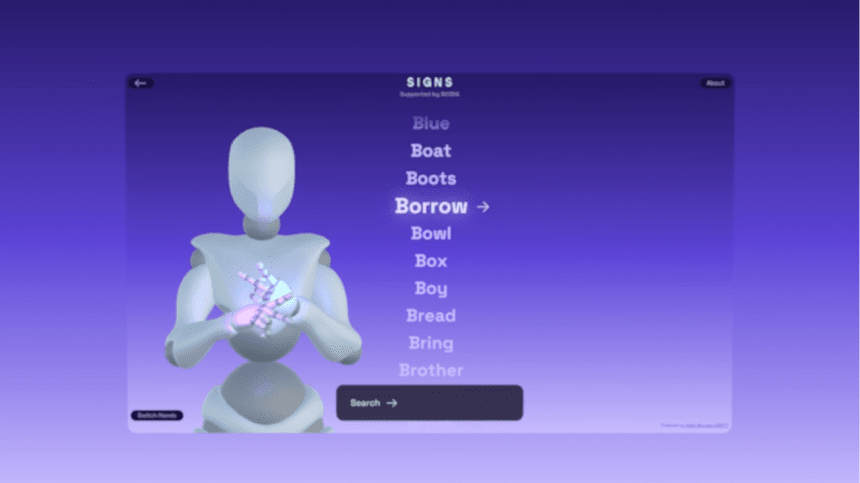

- Step-by-step guidance: a 3D avatar demonstrates each sign from multiple angles

- Real-time feedback: AI tracks the user’s hand movements, providing instant corrections

- A growing ASL database: users contribute videos of themselves signing, helping to train the AI and build an ever-expanding, open-source dataset

For the first time, learners don’t just watch—they interact. They get immediate, personalised feedback. They practice with confidence, knowing that every correction brings them closer to fluency.

And it’s working.

The impact

Signs launched with zero paid media—and still, the world listened.

- Featured on CNN, Axios, VentureBeat, and across global media.

- A rapidly expanding dataset fuelling the future of AI-powered sign language recognition.

- 1B+ earned impressions

- 20M+ people reached in the first week

- 20,000+ signs learned in 10 days

Building a global AI movement

Signs is not just teaching ASL—it’s shaping the future of AI-powered accessibility:

- 400,000+ video clips and 1,000+ signs in development.

- Continuous AI training, refining recognition of complex sign movements and expressions.

- A dataset made publicly available by NVIDIA, allowing researchers and developers to build the next generation of AI-driven accessibility tools—from real-time sign language translation in video conferencing to an AI assistant that understands sign language.

By opening up this dataset, Signs isn’t just helping individual learners—it’s fuelling a future where AI doesn’t just recognise sign language, but understands it.

Signs is free, open to all, and constantly improving.

- Learn – Start signing today at signs-ai.com

- Contribute – Record and upload signs to help expand the dataset

- Share – The more people use Signs, the smarter it becomes

“Ultimately, our goal is to connect families, friends, and communities by making ASL learning more accessible, while simultaneously enabling the creation of more inclusive AI technologies.”

Michael Boone, NVIDIA